Why AI Regulation is About to Get Messy

I kept hearing the same AI arguments everywhere — and something wasn’t adding up.

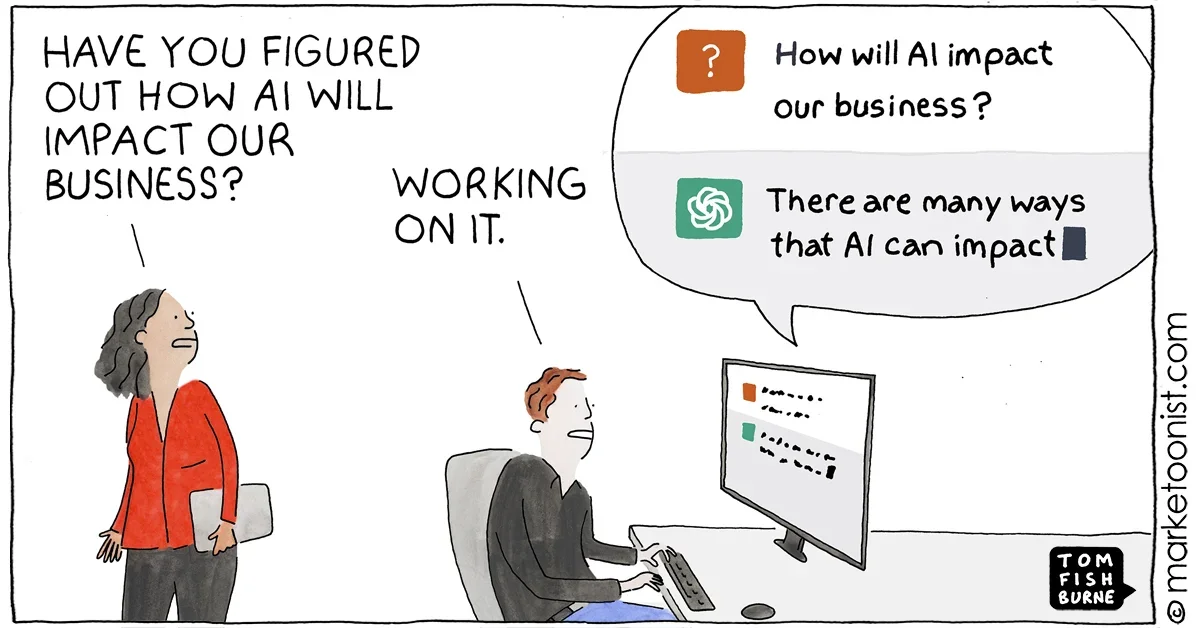

Tom Fishburne, Marketoonist.com

TLDR:

AI isn’t the real issue. Responsibility is. Big companies can absorb audits and ambiguity. Small businesses and creators can’t. Until ownership and accountability are clear, confusion will keep pace with the technology.

Depending on whom you ask, artificial intelligence is either revolutionizing creativity or hollowing it out entirely. It’s accused of replacing artists, defended as a productivity tool, and treated as everything from a thinking partner to a moral failure. What gets lost in all of that noise is a simpler truth: Most people arguing about AI can’t agree on what they’re actually talking about.

Think about it like this: You’re sitting at a family dinner, and your mother says, “I don’t like spicy food.” Half the table nods along, thinking she means hot sauce. The other half starts arguing about ghost peppers. Someone brings up garlic. Before long, everyone’s debating spice levels, but no one’s talking about the same thing anymore.

To define AI, let’s begin with an old favorite tool of ours: spellcheck. In its modern form, it is a narrow, task-specific application of machine learning designed to recognize patterns in language and suggest corrections. So are grammar tools, autocomplete, and predictive text. When we write scholarly articles, do we credit Microsoft Word for fixing a sentence? Did we cite Clip Art in 6th grade, when we wrote our (I can attest, super cool) fantasy short story for creative writing class? No. Those tools slipped quietly into creative workflows and became invisible.

Then, around 2020, the conversation changed. Generative AI crossed a psychological line. Not because it corrected words better than Microsoft Word, but because it began delivering work that looks finished. That’s where the discomfort starts: not because the technology is new, but because authorship suddenly feels harder to define.

This is where the conversation usually turns philosophical. Art, after all, has always lived in imperfection. The typo you don’t catch. The wrong word: cynical instead of cylinder. The sentence that almost works, but doesn’t. Those mistakes aren’t flaws; they’re fingerprints. Proof that a human hand was involved.

AI, by contrast, is built to optimize. That’s not a glitch in its design. It’s the point. And that’s why fully outsourcing creative work to AI often feels hollow. Something essential disappears when friction disappears.

However, that doesn’t mean AI has no place in creative work. Used as an editor, a brainstorming partner, or a way to clarify dense information, generative AI can function just as assistively as spellcheck ever did. The problem isn’t generation itself. It’s when generation replaces intention.

Which brings us to the real fear: control, or a lack of it, to be exact.

Technology does not make decisions on its own. People do. AI doesn’t decide to replace artists; people decide to use it that way. The anxiety around AI isn’t about intelligence. It’s about responsibility.

That’s why large companies are suddenly eager to clarify whether AI was used or not. When studios emphasize traditional pipelines or projects advertise “no AI used,” they’re not making artistic statements so much as drawing legal and reputational boundaries. Big companies already have contracts that account for AI; most independent creators and small businesses don’t. What looks like a pro- or anti-AI stance is often just risk management. Companies aren’t rejecting AI. They’re deciding where they can afford to acknowledge it.

From a risk-management standpoint, as AI regulations become more specific, audits will likely focus less on the final work and more on how it was made. The question won’t be “what did you create?” so much as “can you clearly explain your process?” That’s where things get complicated. Strict definitions tend to create more confusion than clarity. It’s a bit like being asked to prove how you came up with an idea. Not where you typed it, or what tools you used, but the exact moment it formed. Did it happen in the shower? On a walk? Halfway through a conversation you don’t even remember anymore? When people are forced to account for something that was never fully traceable to begin with, they end up spending more time justifying their process than actually doing the work.

Picture twelve-year-old me being asked to explain where a mermaid-vampire romantasy idea came from. I didn’t know then, and I don’t know now. Good luck regulating imagination. And yet, it’s not hard to imagine a future where that question becomes routine for the kids growing up now.

Then there’s a larger argument, and it’s one with real ground to stand on. Some people want AI to stop altogether, which is why they choose to boycott it. That position makes sense, especially for those who see real harm in how the technology is being used right now. But disengagement creates its own problem. It’s like deciding the food system is broken and refusing to support anything that could improve it. If no one invests in better farming practices, stronger supply chains, or clearer regulation, nothing changes.

The same logic applies to AI. Without participation, there is no funding. Without funding, there is no research, no oversight, and no meaningful progress toward ethical or sustainable use. It’s similar to pulling resources from public schools, healthcare systems, or public transit because they aren’t working as intended. Without investing in better programs, care, or infrastructure, the outcome doesn’t improve. Wanting something to stop is understandable. But if the system continues to exist anyway, refusing to engage doesn’t fix it. Worse, it only removes your influence over what comes next.

AI isn’t the thing we’re actually arguing about. Responsibility is. Who owns the work. Who carries the risk. Who benefits from efficiency, and who absorbs the uncertainty.

Until those questions are answered clearly, the technology will keep moving forward — and so will the confusion. It will shape how policymakers write rules, how companies prepare for audits, and how small businesses and creators decide whether it’s safe to keep creating at all. Large organizations can absorb the cost of compliance and documentation. Most individuals can’t.

Which leaves one question worth sitting with: if creativity must be justified by logic to be trusted, what happens to art?